Build an AI CEO for Your SaaS

Never sleeps. Always shipping. Bug fixes before customers can refresh their inbox.

€10k to build vs €200k/year to hire. No equity demands. No ego. Just execution—on-prem AI that fixes bugs, ships features, and deploys from Jira or WhatsApp.

> The Token Economics Problem

API costs are rising ~15% annually. Your "affordable" AI spend today becomes a budget crisis tomorrow.
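To see how a ~15% annual increase compounds, here is a minimal Python sketch. The €1,000/month starting spend is a made-up figure for illustration, and the 15% rate is the estimate quoted above, not a measured value.

```python
def projected_spend(monthly_spend_eur: float, annual_increase: float, years: int) -> list[float]:
    """Return projected monthly spend for year 0..years under compound growth."""
    return [
        round(monthly_spend_eur * (1 + annual_increase) ** year, 2)
        for year in range(years + 1)
    ]


# Hypothetical starting point: EUR 1,000/month, usage held constant.
for year, spend in enumerate(projected_spend(1000.0, 0.15, 5)):
    print(f"year {year}: EUR {spend:,.2f}/month")
```

Under these assumptions the bill roughly doubles in five years even if usage stays flat.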

The Smart Alternative

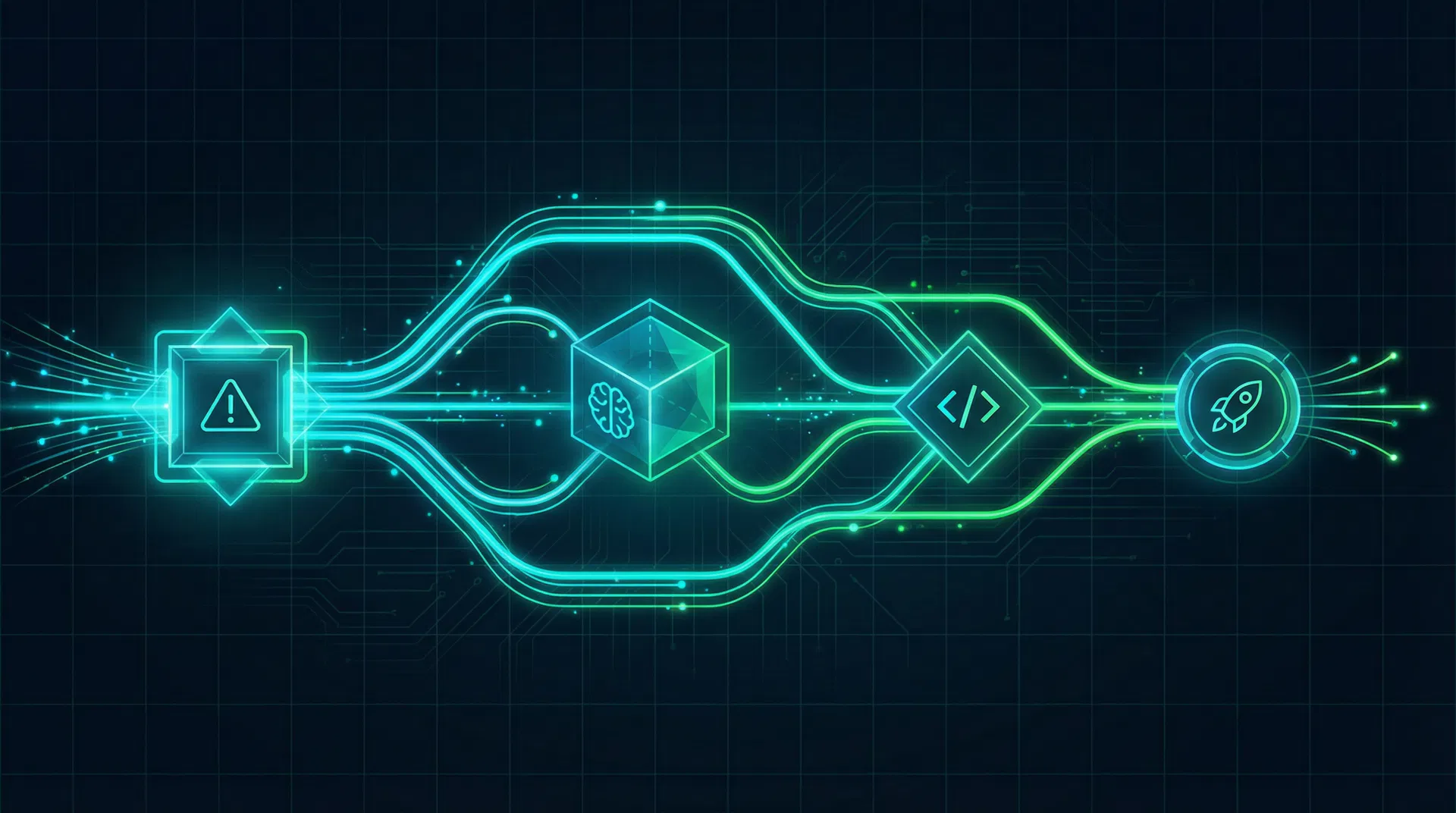

> How It Works

From bug detection to production deployment in minutes, not hours.

Detect

Sentry alert or Jira ticket triggers the system

Analyze

AI reads codebase, understands context

Fix

Generates code + tests, creates PR

Deploy

Auto-deploy to staging, CEO approves prod
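The four steps above can be sketched as a small state machine in which production is the only stage that needs a human. This is an illustrative Python sketch, not the product's actual implementation; `FixJob`, `Stage`, and the method names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Stage(Enum):
    DETECTED = "detected"            # Sentry alert or Jira ticket received
    ANALYZED = "analyzed"            # codebase context gathered
    PR_OPENED = "pr_opened"          # code + tests generated, PR created
    ON_STAGING = "on_staging"        # auto-deployed to staging
    IN_PRODUCTION = "in_production"  # deployed after approval


@dataclass
class FixJob:
    ticket_id: str
    stage: Stage = Stage.DETECTED
    history: list = field(default_factory=lambda: [Stage.DETECTED])

    def _advance(self, expected: Stage, new: Stage) -> None:
        # Stages must be visited in order; skipping one is an error.
        if self.stage is not expected:
            raise RuntimeError(f"cannot move to {new.value} from {self.stage.value}")
        self.stage = new
        self.history.append(new)

    def analyze(self) -> None:
        self._advance(Stage.DETECTED, Stage.ANALYZED)

    def open_pr(self) -> None:
        self._advance(Stage.ANALYZED, Stage.PR_OPENED)

    def deploy_staging(self) -> None:
        self._advance(Stage.PR_OPENED, Stage.ON_STAGING)

    def deploy_production(self, approved_by: Optional[str]) -> None:
        # Production is never automatic: a named approver is required.
        if not approved_by:
            raise PermissionError("production deploy requires explicit approval")
        self._advance(Stage.ON_STAGING, Stage.IN_PRODUCTION)
```

A job that reaches staging stays there until `deploy_production` is called with an approver, which mirrors the "CEO approves prod" step.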

> What Works Exceptionally Well

Roughly 90% success on most of these task types with on-prem models (€0 per request); complex architecture changes are routed to Claude

Bug Fixes

Known code paths, missing null checks, type errors

Test Generation

Comprehensive tests from existing code patterns

Refactoring

Extract functions, rename variables, clean up

Documentation

JSDoc, README sections, API docs generation

Architecture Changes

Cross-file refactors with Claude 3.5 Sonnet

Security Reviews

Audit middleware, find vulnerabilities

> Guardrails Matter

Models lose context. Costs spiral. Output gets inconsistent. Without guardrails, AI becomes a liability. With thoughtful orchestration, worktrees, and your SOPs baked in—AI becomes reliable.

Models Lose Context

Without worktrees and context isolation, models mix up unrelated issues. Fix one bug, break another. Your 200k codebase becomes a tangled mess in the model's "memory."
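One way to get this isolation is to give every ticket its own Git worktree and branch, so the model only ever sees one issue's changes. The sketch below only builds the `git worktree add` command; the `fix/<ticket>` branch naming and the `worktrees/` directory layout are assumptions for illustration.

```python
from pathlib import PurePosixPath


def worktree_add_command(repo_root: str, ticket_id: str, base_ref: str = "main") -> list[str]:
    """Build a `git worktree add` call that gives one ticket its own checkout and branch."""
    root = PurePosixPath(repo_root)
    branch = f"fix/{ticket_id.lower()}"                 # hypothetical naming scheme
    checkout_dir = root.parent / "worktrees" / ticket_id  # hypothetical layout
    return [
        "git", "-C", str(root),
        "worktree", "add",
        "-b", branch,          # create a new branch for this ticket
        str(checkout_dir),     # separate working directory
        base_ref,              # commit-ish to start from
    ]


print(" ".join(worktree_add_command("/srv/app", "PROJ-42")))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`; each ticket's checkout can later be removed with `git worktree remove`.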

Costs Spiral Out of Control

One infinite loop of API calls. One bug in auto-retry logic. Wake up to a €10k bill because there's no hard budget limit or request throttling.
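A hard budget limit and request throttle can be a few lines of code placed in front of every paid API call. The following is a minimal sketch under assumed names (`SpendGuard`, `authorize`) and assumes the caller can estimate a request's cost up front.

```python
import time


class BudgetExceeded(RuntimeError):
    """Raised when a call would break the budget or the rate cap."""


class SpendGuard:
    """Refuses API calls once a hard budget or a per-minute request cap is hit."""

    def __init__(self, budget_eur: float, max_requests_per_minute: int, clock=time.monotonic):
        self.budget_eur = budget_eur
        self.max_rpm = max_requests_per_minute
        self.spent_eur = 0.0
        self._clock = clock
        self._recent = []  # timestamps of requests made in the last 60 seconds

    def authorize(self, estimated_cost_eur: float) -> None:
        now = self._clock()
        # Drop timestamps that fell out of the 60-second window.
        self._recent = [t for t in self._recent if now - t < 60.0]
        if len(self._recent) >= self.max_rpm:
            raise BudgetExceeded("request rate limit reached")
        if self.spent_eur + estimated_cost_eur > self.budget_eur:
            raise BudgetExceeded("hard budget limit reached")
        # Only record the request once both checks have passed.
        self._recent.append(now)
        self.spent_eur += estimated_cost_eur
```

Calling `guard.authorize(cost)` before each request means a runaway retry loop fails fast with an exception instead of accumulating charges.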

Output Ignores Your SOPs

Your team has architecture guidelines, coding standards, naming conventions—your secret sauce. Generic AI doesn't know them. Output requires heavy editing to match your style.

Why Guardrails Transform AI from Liability to Asset

Watch Guardrails Prevent a €5k Mistake

> Smart Model Selection

Be smart about your AI spend. Use on-prem models as your workhorse: they handle 95% of tasks at €0 per request. Deploy Claude/GPT as your strike force for the complex 5%.
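A routing rule for this split can be very simple. The sketch below is illustrative only: the task-type labels, the `choose_backend` name, and the 10-file threshold are assumptions, not the product's actual router.

```python
ON_PREM = "on_prem"  # local workhorse model
HOSTED = "hosted"    # Claude/GPT strike force

# Task types sent to the hosted model; everything else stays local.
HOSTED_TASK_TYPES = frozenset({"architecture_change"})


def choose_backend(task_type: str, files_touched: int = 1, max_local_files: int = 10) -> str:
    """Pick a backend from the task type and how many files the change spans."""
    if task_type in HOSTED_TASK_TYPES or files_touched > max_local_files:
        return HOSTED
    return ON_PREM


print(choose_backend("bug_fix"))               # stays on-prem
print(choose_backend("architecture_change"))   # goes to the hosted model
```

Keeping the rule explicit makes the default cheap: a task only reaches a paid API when it matches a listed type or spans many files.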

> The ROI

398% return over 3 years. Pays for itself in 12 weeks.

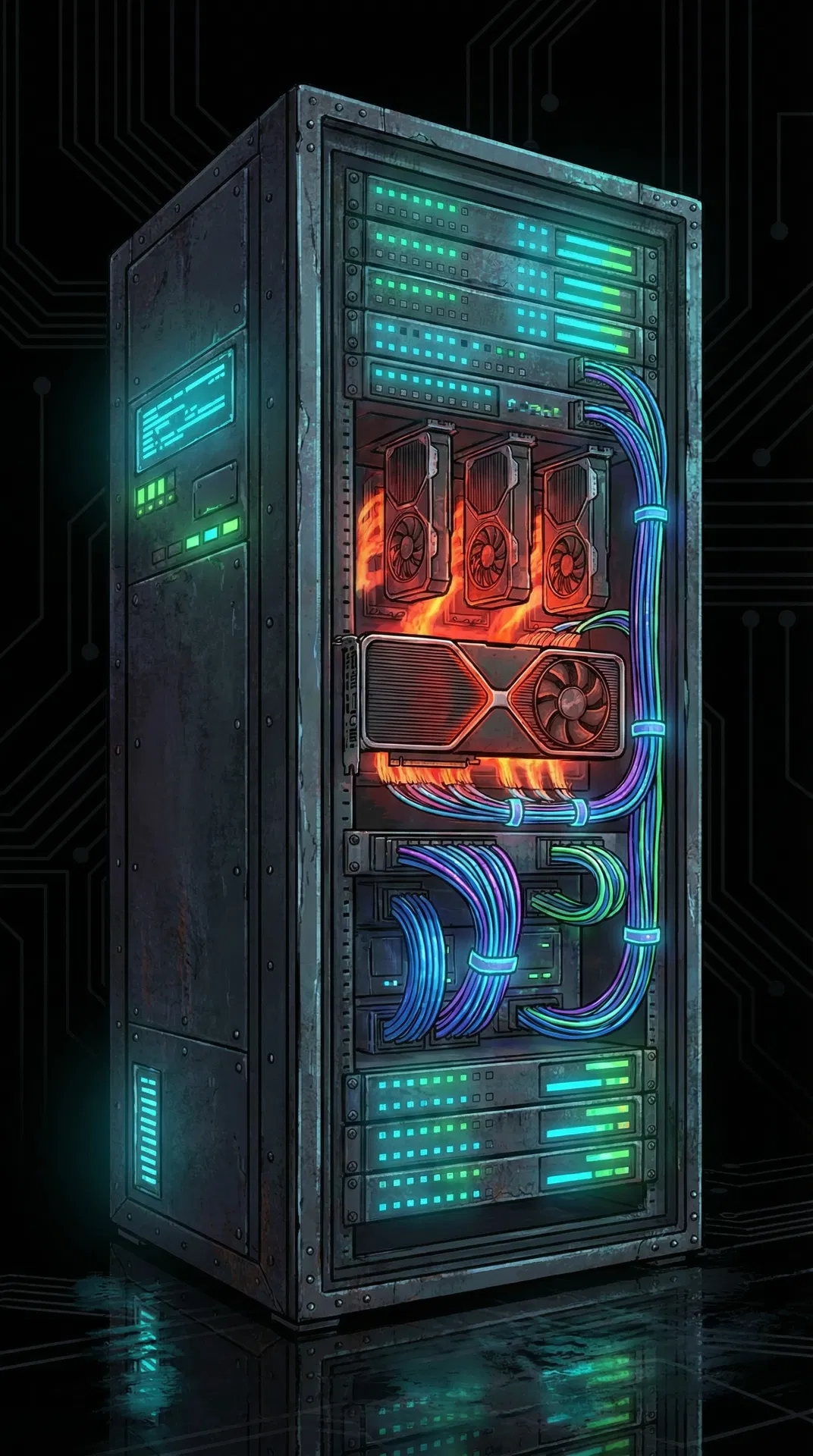

> The Hardware Stack

€10k one-time investment for unlimited on-prem AI inference. Runs on Hetzner, OVH, or your own servers.

> Why Blueprint?

The only platform that goes from Jira to production without touching your IDE.

| Feature | Blueprint | Copilot | Cursor | DIY |
|---|---|---|---|---|
| Zero-touch deploy | ✓ | ✗ | ✗ | ✗ |
| On-prem option | ✓ | ✗ | ✗ | ⚠ |
| WhatsApp/Telegram control | ✓ | ✗ | ✗ | ✗ |
| Model choice per task | ✓ | ✗ | ✗ | ⚠ |
| Jira → PR → Deploy | ✓ | ✗ | ✗ | ⚠ |
| Auto-generated tests | ✓ | ⚠ | ⚠ | ⚠ |

> CEO Dashboard

Deploy from anywhere. Approve from WhatsApp. Full visibility without touching code.

> 6-Week Implementation

From hardware setup to full team rollout in 6 weeks.

Hardware & Infrastructure

- Ubuntu 22.04 + NVIDIA drivers
- vLLM inference server
- PostgreSQL, Qdrant, Redis

Control Plane

- Temporal orchestration
- Model gateway + router
- Policy engine + audit logs

IDE Integration

- VS Code / Cursor extension
- Model picker UI
- Cost tracking dashboard

Automation & Safety

- Code → tests → PR pipeline
- CI/CD integration
- Security scanning

External Integrations

- Jira webhooks
- WhatsApp / Telegram bot
- Git hooks

Rollout & Training

- Documentation
- Team training
- Gradual rollout

> FAQ

Common questions from dev teams evaluating AI CEO

What hardware do I actually need?

Two RTX 4090s (48GB total VRAM), 256GB RAM, 4TB NVMe. Total ~€10k. Runs Qwen-32B and Llama-70B locally (quantized to fit in 48GB). You can rent equivalent hardware from Hetzner or OVH for ~€300/month.
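A quick back-of-the-envelope check shows why precision matters on 48GB of VRAM. This sketch counts model weights only; the KV cache and activations need additional memory, so treat the results as lower bounds.

```python
TOTAL_VRAM_GB = 48  # two RTX 4090s at 24GB each


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only (no KV cache or activations)."""
    return params_billion * bits_per_weight / 8


for name, params in (("32B model", 32), ("70B model", 70)):
    for bits in (16, 8, 4):
        needed = weight_memory_gb(params, bits)
        verdict = "fits" if needed < TOTAL_VRAM_GB else "does not fit"
        print(f"{name} at {bits}-bit: {needed:.0f} GB of weights, {verdict} in {TOTAL_VRAM_GB} GB")
```

By this estimate a 70B model needs about 4-bit quantization to fit, and a 32B model needs 8-bit or lower.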

How is this different from Cursor or Copilot?

Cursor and Copilot are IDE assistants—you still type, review, and deploy. AI CEO is zero-touch: Jira ticket → PR → tests → staging → your approval → prod. No IDE required.

What's the actual success rate?

92% for bug fixes, 88% for test generation, 95% for refactoring. Complex architecture changes drop to 78%, which is when we route to the Claude strike force instead of the workhorse models.

Can I use this with my existing CI/CD?

Yes. AI CEO creates standard PRs with conventional commits. Works with GitHub Actions, GitLab CI, CircleCI, Jenkins. Your existing pipelines run unchanged.
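For reference, a Conventional Commits header has the shape `type(scope): description`, optionally followed by a footer. The helper below is a hypothetical sketch of how such a message could be assembled; the allowed type list and the `Refs:` footer are assumptions for illustration.

```python
ALLOWED_TYPES = {"fix", "feat", "refactor", "test", "docs", "chore"}


def conventional_commit(type_: str, description: str, scope: str = "", ticket_id: str = "") -> str:
    """Format a commit message in Conventional Commits style."""
    if type_ not in ALLOWED_TYPES:
        raise ValueError(f"unknown commit type: {type_}")
    header = f"{type_}({scope}): {description}" if scope else f"{type_}: {description}"
    if ticket_id:
        # A blank line separates the header from the footer.
        return f"{header}\n\nRefs: {ticket_id}"
    return header


print(conventional_commit("fix", "handle missing user id", scope="auth", ticket_id="PROJ-42"))
```

Because the format is plain text, existing changelog and release tooling that parses conventional commits can keep working on machine-generated PRs.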

What about security and secrets?

Context filtering blocks .env files automatically. Semgrep scans all generated code. With the 100% on-prem option, your codebase never leaves your infrastructure.
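Context filtering of this kind can be sketched as a filename check that runs before any file is added to a model prompt. Only `.env` comes from the text above; the other patterns and the function name are assumptions added for illustration.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# ".env" is from the description above; the remaining patterns are hypothetical extras.
BLOCKED_PATTERNS = (".env", ".env.*", "*.pem", "*.key")


def is_allowed_in_context(path: str) -> bool:
    """Return False for files that must never be sent to a model."""
    name = PurePosixPath(path).name
    return not any(fnmatchcase(name, pattern) for pattern in BLOCKED_PATTERNS)


candidates = ["src/app.py", ".env", "config/.env.production", "certs/server.pem"]
print([p for p in candidates if is_allowed_in_context(p)])
```

Matching on the file name rather than the full path means a secret file is blocked wherever it sits in the repository.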

How long does implementation take?

6 weeks from hardware setup to full team rollout. Weeks 1-2: infrastructure. Weeks 3-4: integrations. Weeks 5-6: training and gradual rollout.

€10k investment

→ €135k saved over 3 years

The tools are ready. The models are good enough. The hardware is affordable.

What are you waiting for?